A generative adversarial network (GAN) is a type of machine learning model that consists of two neural networks, the generator and the discriminator, which are trained together to generate new data. The generator takes random noise as input and produces synthetic data, such as images, based on patterns learned from a training dataset. The discriminator, on the other hand, tries to distinguish the generated data from real data in the training set.
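For reference, the competition between the two networks is usually written as the minimax objective from the original GAN formulation. Here $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a noise vector $z$, $p_{\text{data}}$ is the training-data distribution, and $p_z$ is the noise distribution:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator maximizes $V$ by scoring real samples near 1 and generated samples near 0; the generator minimizes it by pushing $D(G(z))$ toward 1. In practice, many implementations instead train the generator to maximize $\log D(G(z))$ (the "non-saturating" loss), which gives stronger gradients early in training.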

Through this adversarial training process, in which the generator and discriminator compete against each other, the GAN learns to generate increasingly realistic outputs that can closely resemble the original data. While GANs can produce visually or audibly impressive outputs, they can also hallucinate, generating content that appears realistic but is not actually present in the training data.
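To make the adversarial loop concrete, here is a deliberately tiny sketch in plain Python, not a production GAN. All of its choices are illustrative assumptions: the "real" data is a 1-D Gaussian with mean 4, the generator is just $G(z) = z + b$ with a single learnable shift `b`, and the discriminator is a logistic regression $D(x) = \sigma(wx + c)$ with a little L2 weight decay for stability. Each step updates the discriminator on the binary cross-entropy loss and then the generator on the non-saturating loss $-\log D(G(z))$. The function names are hypothetical.

```python
import math
import random


def sigmoid(s: float) -> float:
    # Numerically safe logistic function.
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 5000, batch: int = 64, lr: float = 0.05,
                  weight_decay: float = 0.1, seed: int = 0) -> float:
    """Toy 1-D GAN: real data ~ N(4, 1); generator G(z) = z + b learns only b."""
    rng = random.Random(seed)
    b = 0.0          # generator parameter (output shift)
    w, c = 0.0, 0.0  # discriminator parameters: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient of -log D(real) - log(1 - D(fake)),
        # averaged over the batch, plus L2 weight decay on w.
        gw, gc = weight_decay * w, 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x / batch
            gc -= (1.0 - d) / batch
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x / batch
            gc += d / batch
        w -= lr * gw
        c -= lr * gc

        # Generator step on the non-saturating loss -log D(G(z)),
        # using the freshly updated discriminator: dL/db = -(1 - D) * w.
        gb = 0.0
        for x in fake:
            gb -= (1.0 - sigmoid(w * x + c)) * w / batch
        b -= lr * gb

    return b
```

With the default settings, `train_toy_gan()` should return a shift close to the real-data mean of 4. Image GANs replace both functions with deep networks, but the alternating discriminator/generator update pattern is the same.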

During training, the generator learns to capture the statistical patterns and underlying structure of the training data. However, GANs are prone to hallucination. In the context of GANs, the term “hallucination” refers to the phenomenon where the model generates data that appears realistic but introduces information or features that are not present in the original dataset. These hallucinated details can arise from various factors, such as limitations of the training data, biases present in the data, or the model’s tendency to fill in missing information based on learned patterns. Even when ground-truth (GT) data is available during testing, GAN outputs can still exhibit hallucinatory elements.

These elements can result from the model extrapolating or interpolating beyond the boundaries of the training data, producing output that resembles the GT but includes additional fictional or erroneous information. So while GANs can generate visually appealing images, they may introduce subtle or significant deviations from reality. These hallucinations can appear as objects or details that were not present in the original data, leaving generated images with artifacts, unrealistic textures, or inaccurate features. Hallucination remains an open challenge in GAN research, because mitigating and controlling it is crucial for producing reliable and trustworthy outputs.
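When ground truth is available, one crude way to localize possible hallucinations is to flag pixels where the generated image departs from the GT by more than a tolerance. The sketch below assumes grayscale images stored as nested lists with values in [0, 1]; the function name `deviation_mask` and the default threshold of 0.1 are illustrative choices, not an established metric.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image, values in [0, 1]


def deviation_mask(generated: Image, ground_truth: Image,
                   threshold: float = 0.1) -> Tuple[List[List[bool]], float]:
    """Flag pixels whose absolute error versus the GT exceeds `threshold`.

    Returns the boolean mask and the fraction of flagged pixels.
    """
    if len(generated) != len(ground_truth) or any(
        len(g_row) != len(t_row) for g_row, t_row in zip(generated, ground_truth)
    ):
        raise ValueError("images must have the same shape")
    mask = [[abs(g - t) > threshold for g, t in zip(g_row, t_row)]
            for g_row, t_row in zip(generated, ground_truth)]
    total = sum(len(row) for row in mask)
    flagged = sum(sum(row) for row in mask)  # True counts as 1
    return mask, (flagged / total if total else 0.0)
```

A pixel-wise test like this cannot tell a hallucinated detail from a harmless one-pixel misalignment, and it says nothing when no GT exists, so in practice it would be one signal among several.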

1. Time-consuming training and overfitting: GANs require extensive training on large datasets, which takes a significant amount of time, and such datasets can be challenging to obtain in certain applications. This makes it difficult to use these methods in real-time applications that require immediate image enhancement. GANs are also prone to overfitting, becoming too specialized in generating images or text from a specific dataset. This can lead to poor generalization, biased outputs, and unreliable results in real-world scenarios.

2. Inconsistencies in generated images and lack of control over image properties: GANs are known for their ability to generate visually impressive images, but they offer little fine-grained control over the output. GAN-based image enhancement can produce inconsistent and unintended results, such as an altered color balance or specific objects removed from the scene, when applied to different images or even to the same image multiple times. This limits its use in applications where fine-grained control over image properties is essential, and makes it challenging to use in applications that require consistency and reliability.

3. Difficulty training on diverse datasets and the need for large datasets: GANs need a large, diverse dataset to learn from, which can be challenging to obtain for certain applications. Without a diverse dataset, the generated images may not be representative of real-world data.

4. Inability to handle complex features: GAN-based image enhancement may struggle to generate images that contain complex features or structures, which limits its usefulness in applications that require the enhancement of complex images.

5. Limited interpretability: GANs are notoriously difficult to interpret, so it is hard to understand how they generate a given image. This makes them difficult to use in applications where interpretability is critical, such as legal, medical, or security analysis of images or audio.

6. The risk of generating false data: GANs can generate images that appear realistic but contain false data or artifacts. This can be problematic in applications that require accurate image analysis, such as in security or forensics.

7. Legal and ethical concerns: The use of GAN-based image enhancement can raise legal and ethical issues, especially where the generated images could be used to manipulate or deceive people, for example as realistic fake images. This makes it essential to exercise caution when using these methods in real-world applications. Additionally, the data used to train the model may have been obtained without consent or without adequate bias mitigation.

8. Inability to handle real-time inference and limited scalability: GAN-based image enhancement can be slow and computationally intensive, which makes it challenging to use in real-time settings. This limits its use where immediate enhancement is essential, such as video processing, or where large amounts of data must be processed quickly.

9. Lack of robustness, domain specificity, and difficulty handling multi-modal data: GANs are typically designed to generate images in a single modality, such as RGB color. This makes it challenging to use them where the input contains multiple modalities, such as images with both color and depth information. They can also be sensitive to perturbations in the input, such as noise or variations in lighting, which makes them impractical in real-world scenarios where the input data is noisy or contains artifacts.

10. Limited interpretability of the latent space: It can be challenging to interpret a GAN's latent space because it has no direct mapping to human-understandable concepts or features. Instead, the latent space encodes complex, abstract representations of the training data, so understanding how the model generates images becomes difficult. While we can manipulate latent vectors to generate variations of an image, it is not straightforward to determine which attributes or features in the latent space correspond to specific aspects of the generated image. This hinders our ability to gain insight into the inner workings of the model and to understand the reasoning behind its outputs. The limited interpretability of the latent space matters wherever interpretability is crucial. In medical imaging, for example, understanding the relationship between specific features in the latent space and medical conditions could be essential for diagnosis or treatment planning. Without interpretability, it becomes challenging to validate the generated images or ensure that they align with the desired objectives in critical applications, let alone in legal or manufacturing settings where each individual inference must be concretely explainable.

Therefore, while GANs have shown impressive results in generating realistic images, the lack of interpretability of the latent space poses a limitation that needs to be addressed for certain applications where understanding the model’s decision-making process is essential, especially to identify potential errors, artifacts or biases.
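The overfitting concern in item 1 can be probed with a common sanity check: for each generated sample, measure the distance to its nearest training sample, since near-zero distances suggest the generator is reproducing training data rather than generalizing. This sketch compares raw feature vectors with Euclidean distance; real pipelines usually compare embeddings from a pretrained network, and the helper names and the `1e-3` threshold are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_neighbor_distances(generated: Sequence[Vector],
                               training: Sequence[Vector]) -> List[float]:
    """Euclidean distance from each generated sample to its closest training sample."""
    return [min(math.dist(g, t) for t in training) for g in generated]


def likely_memorized(generated: Sequence[Vector], training: Sequence[Vector],
                     threshold: float = 1e-3) -> List[int]:
    """Indices of generated samples that are (near-)copies of a training sample."""
    distances = nearest_neighbor_distances(generated, training)
    return [i for i, d in enumerate(distances) if d < threshold]
```

For example, with training points `(0, 0)` and `(1, 1)`, the generated points `(0, 0)`, `(0.5, 0.5)` and `(1, 1.0005)` give `[0, 2]`. A clean result does not prove good generalization; it only rules out the most blatant copying.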
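The run-to-run inconsistency described in item 2 can be quantified by enhancing the same image several times and measuring how much each pixel varies. In this sketch the enhancer is any callable that takes an image and a random generator; `stochastic_enhancer` is a hypothetical stand-in for a GAN that draws fresh latent noise on every call, and `deterministic_enhancer` is a plain contrast stretch included for comparison.

```python
import random
import statistics
from typing import Callable, List

Image = List[List[float]]  # grayscale image, values in [0, 1]


def run_to_run_spread(enhance: Callable[[Image, random.Random], Image],
                      image: Image, runs: int = 8, seed: int = 0) -> float:
    """Mean per-pixel population standard deviation across repeated runs."""
    rng = random.Random(seed)
    outputs = [enhance(image, rng) for _ in range(runs)]
    stds = [statistics.pstdev([out[y][x] for out in outputs])
            for y in range(len(image)) for x in range(len(image[0]))]
    return sum(stds) / len(stds)


def deterministic_enhancer(image: Image, rng: random.Random) -> Image:
    # Ignores rng: a simple contrast stretch with identical output every run.
    return [[min(1.0, 1.2 * v) for v in row] for row in image]


def stochastic_enhancer(image: Image, rng: random.Random) -> Image:
    # Hypothetical stand-in for a GAN that samples new latent noise per call.
    return [[min(1.0, max(0.0, v + rng.gauss(0.0, 0.05))) for v in row]
            for row in image]
```

A spread of zero means the enhancer is repeatable on that image; any positive value is variation a downstream user would see when re-running the same input.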
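For item 8, the real-time requirement reduces to simple arithmetic: at a given frame rate, each frame must be processed within 1000 / fps milliseconds. The helper names below are hypothetical; `measure_latency_ms` times any callable with `time.perf_counter`, and it ignores warm-up, batching, and pre- or post-processing, which a real benchmark would need to account for.

```python
import time
from typing import Any, Callable


def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to process one frame at the given frame rate."""
    return 1000.0 / fps


def max_sustainable_fps(latency_ms: float) -> float:
    """Highest frame rate a model with this per-frame latency can keep up with."""
    return 1000.0 / latency_ms


def fits_realtime(latency_ms: float, fps: float) -> bool:
    """True if one frame can be processed within the frame budget."""
    return latency_ms <= frame_budget_ms(fps)


def measure_latency_ms(fn: Callable[[Any], Any], arg: Any, repeats: int = 20) -> float:
    """Average wall-clock time of fn(arg) in milliseconds (no warm-up, single thread)."""
    start = time.perf_counter()
    for _ in range(repeats):
        fn(arg)
    return (time.perf_counter() - start) * 1000.0 / repeats
```

At 30 fps the budget is about 33.3 ms per frame, so a model that needed, say, 120 ms per frame (an illustrative figure, not a measurement) would sustain only about 8.3 fps, and `fits_realtime(120.0, 30.0)` returns `False`.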
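The sensitivity to input perturbations mentioned in item 9 can be estimated empirically by adding small Gaussian noise to an input and comparing how far the output moves relative to the input. In this sketch `model` is a hypothetical callable that maps a flat list of pixel values to a list of the same length; a ratio well above 1 means small input noise is being amplified.

```python
import random
from typing import Callable, List, Sequence


def sensitivity_ratio(model: Callable[[List[float]], List[float]],
                      x: Sequence[float], noise_std: float = 0.01,
                      trials: int = 20, seed: int = 0) -> float:
    """Average (mean |output change|) / (mean |input change|) under Gaussian noise."""
    rng = random.Random(seed)
    base = model(list(x))
    ratios = []
    for _ in range(trials):
        noisy = [v + rng.gauss(0.0, noise_std) for v in x]
        out = model(noisy)
        d_in = sum(abs(a - b) for a, b in zip(noisy, x)) / len(x)
        d_out = sum(abs(a - b) for a, b in zip(out, base)) / len(base)
        if d_in > 0:
            ratios.append(d_out / d_in)
    return sum(ratios) / len(ratios) if ratios else 0.0
```

An identity model scores exactly 1, and a model that multiplies its input by 3 scores about 3. This is a coarse finite-difference probe; lighting changes or sensor artifacts would need their own, more structured perturbations.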
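The latent-space manipulation described in item 10 usually takes two forms: interpolating between two latent vectors, or sweeping one coordinate while holding the rest fixed. The sketch below only builds the latent vectors; decoding them into images requires a trained generator, which is not shown, and all function names are illustrative. Linear interpolation is used for simplicity; spherical interpolation is a common alternative for Gaussian latents.

```python
import random
from typing import List

Latent = List[float]


def sample_latent(dim: int, rng: random.Random) -> Latent:
    """Draw z ~ N(0, I), a standard choice of GAN latent prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def interpolate(z0: Latent, z1: Latent, steps: int) -> List[Latent]:
    """Evenly spaced points on the straight line from z0 to z1, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [[a + (b - a) * t / (steps - 1) for a, b in zip(z0, z1)]
            for t in range(steps)]


def traverse(z: Latent, dim: int, values: List[float]) -> List[Latent]:
    """Copies of z with coordinate `dim` swept over `values`, all others fixed."""
    sweep = []
    for v in values:
        z_new = list(z)
        z_new[dim] = v
        sweep.append(z_new)
    return sweep
```

Decoding a `traverse` sweep often illustrates the point of item 10: changing a single coordinate can alter several visual attributes at once, and nothing in the vector itself says which ones.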

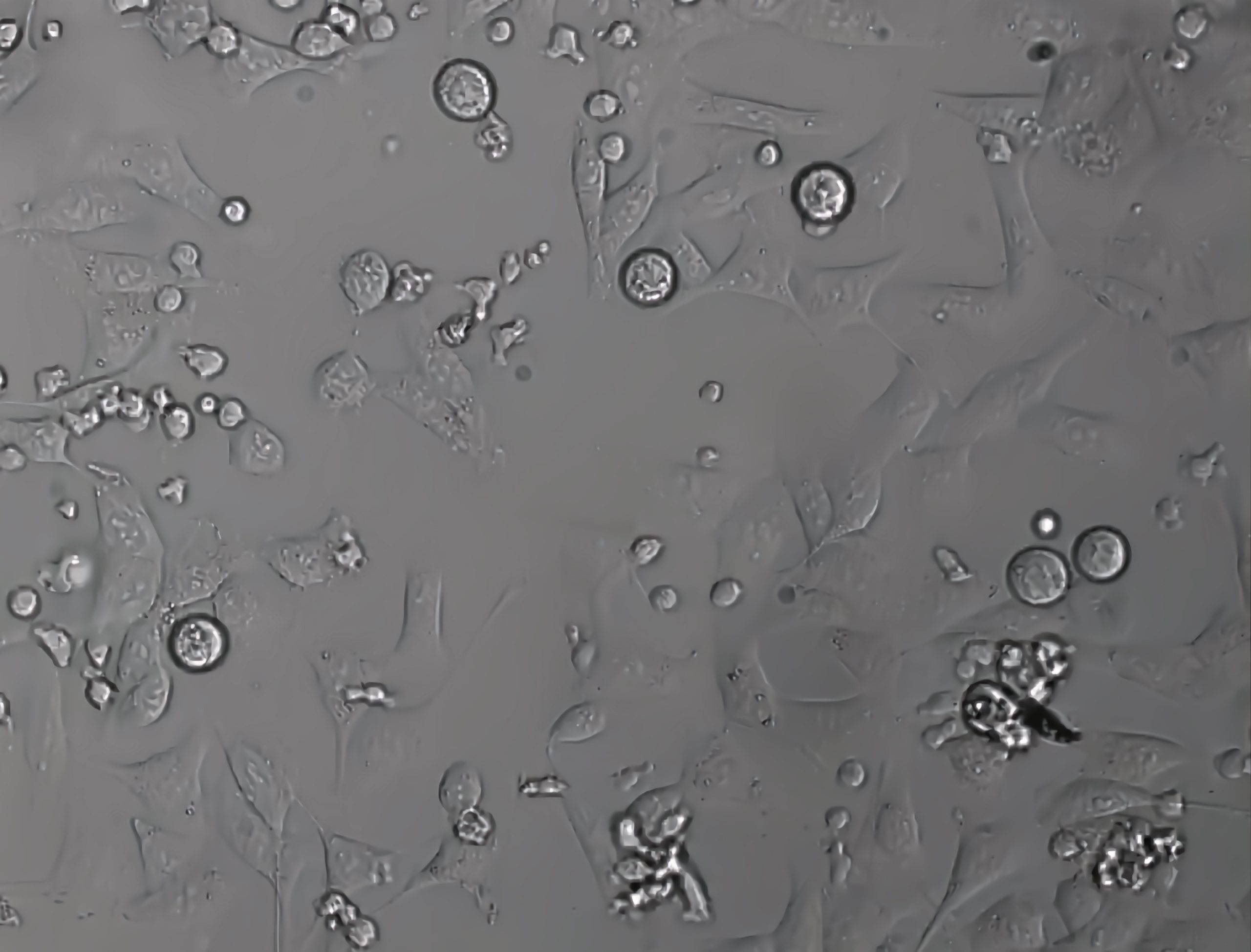

Input Image

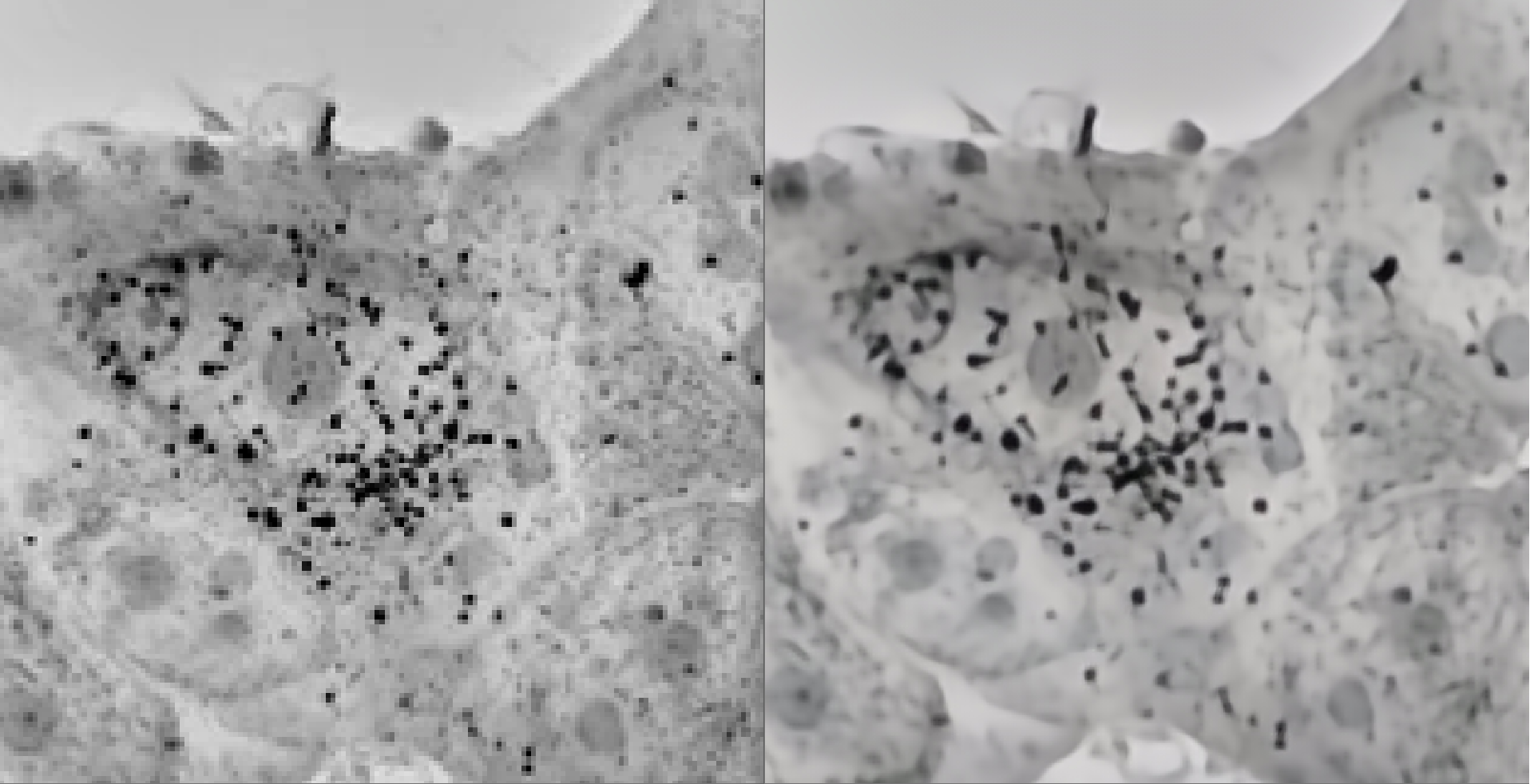

Our Technology