Transparency, Trust, and the Future of Digital Integrity

At Predictive Equations, our AI is designed with a clear boundary: it enhances visual inputs through transformation-based techniques, not generative ones. That means we do not fabricate new content. Instead, our system clarifies existing signal—removing blur, noise, compression artifacts, and other distortions—to restore fidelity to the original media. This difference is subtle but critical: we do not create; we correct.

However, we recognize a growing concern within the broader AI ecosystem, often described as the “Dead Internet Theory.” The theory describes a future (or, as some argue, a present reality) in which much online content is generated by AI, consumed by AI, and recycled as training data for future AI, until human presence becomes difficult to discern and perhaps even irrelevant. This concern is no longer theoretical.

A recent real-world example illustrates the risk. A Reddit-based study, as reported by New Scientist, covertly introduced AI-generated users into online communities without informed consent. These bots mimicked human interaction patterns so effectively that the user base did not detect them. This has caused rightful outrage, but more importantly it demonstrates just how easily generative AI can blend into digital environments, silently altering the information landscape and corrupting the integrity of online discourse.

AI Without Humans Misses the Point

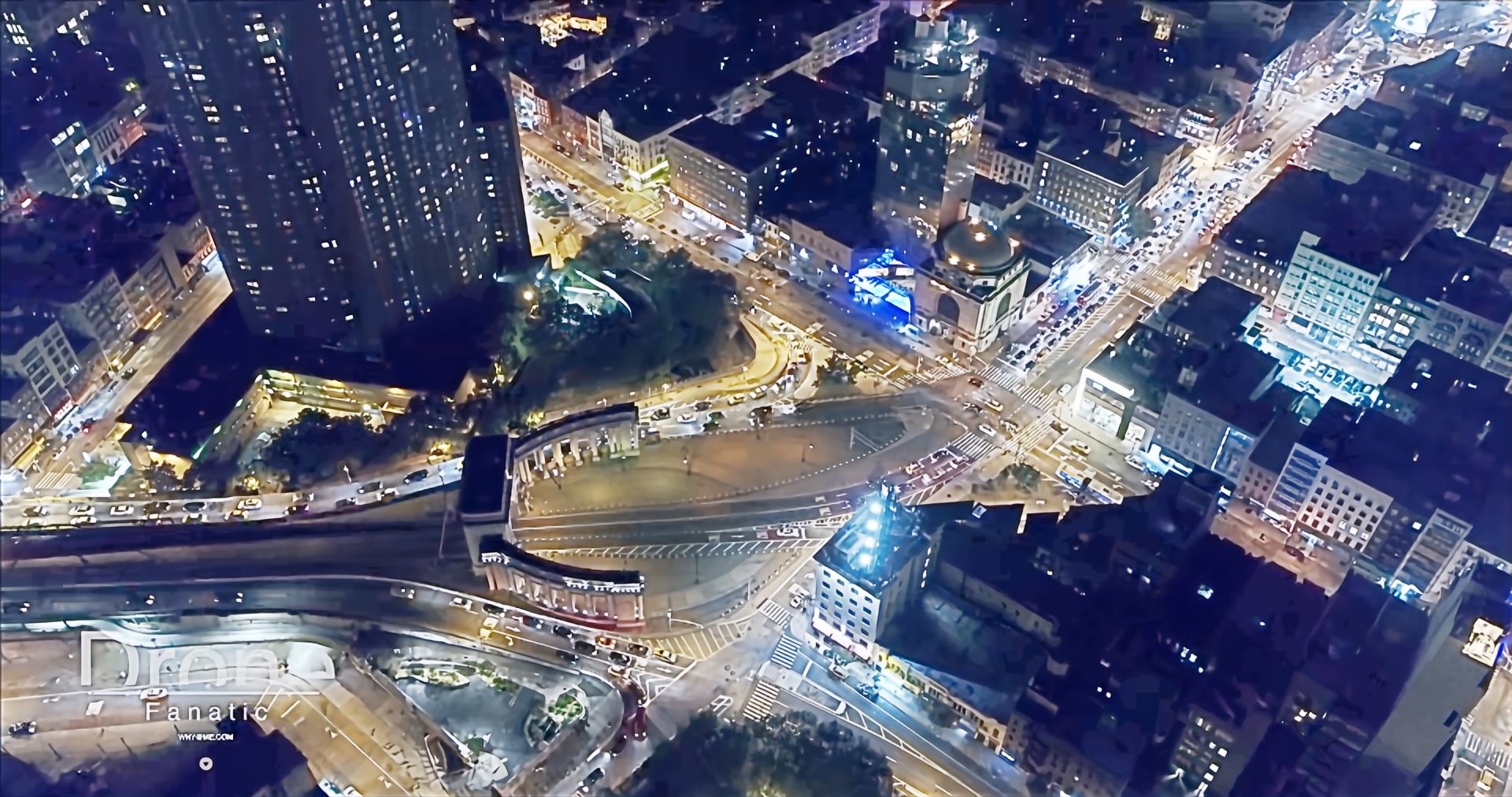

Our outputs—enhanced forensic images, clarified surveillance footage, improved satellite and defense visuals—are often the final step in a digital processing chain. They may be used in legal trials, medical evaluations, defense planning, or intelligence reporting. That gives us an ethical obligation far beyond conventional product design.

To address this, we are implementing a policy of metadata-based traceability. Any image or video processed by our system can be tagged with our signature in the metadata; the tag is optional, but when it is applied it is fully transparent. This allows both humans and systems to identify when and how our AI was used, supporting accountability, auditability, and trust in any setting where our media appears.
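As a rough illustration of what metadata-based traceability could look like in practice, here is a minimal Python sketch. It is not our production format: the field names, the `example-enhancer` tool name, the version string, and the choice of a JSON sidecar record (rather than embedded EXIF or XMP fields) are all assumptions made for the example. The idea is simply to record which tool touched the media, which operations it applied, when, and a hash that ties the record to the exact output bytes.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_provenance_tag(media_bytes, operations,
                         tool="example-enhancer", version="0.0.0"):
    """Build a provenance record for processed media.

    All field names here are hypothetical, chosen for illustration only.
    """
    return {
        "tool": tool,                      # hypothetical tool identifier
        "version": version,                # hypothetical version string
        "generative": False,               # transformation-based, not generative
        "operations": list(operations),    # e.g. ["deblur", "denoise"]
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
        "processed_at": datetime.now(timezone.utc).isoformat(),
    }


def verify_provenance_tag(media_bytes, tag):
    """Return True if the media bytes still match the hash stored in the tag."""
    return hashlib.sha256(media_bytes).hexdigest() == tag.get("sha256")


def to_sidecar_json(tag):
    """Serialize the tag as JSON, suitable for storing alongside the media."""
    return json.dumps(tag, indent=2, sort_keys=True)
```

A reviewer or downstream system could then call `verify_provenance_tag` on the bytes it received: if the media was altered after tagging, the hash no longer matches and the record no longer vouches for it.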

We believe this small but essential step reinforces our broader commitment to ethical AI—especially in a world where the line between real and synthetic content is rapidly eroding. We support emerging efforts to standardize AI content labeling, and we invite other companies to join us in establishing metadata transparency as a norm, not a feature.

You can champion AI. You can accelerate innovation. But if you forget the human being—on the receiving end of every enhanced image, every AI-powered insight—you forget the reason we built this in the first place. Our enhancements are tools, not substitutes for reality. They are meant to support truth, not obscure it.

Our small contribution to preventing the “dead internet” from becoming reality is simple: we own our output. We label our work. And we build systems that serve people, not just machines.

Let’s keep it human.